Edge Computing is reshaping how organizations handle data by moving compute power closer to where data is produced. As data volumes grow at the network perimeter, processing near the source gives industrial sensors, devices, and business apps faster and more reliable results, which in turn supports continuous improvement and proactive maintenance across facilities. Keeping processing near the data cuts latency in critical workflows because fewer requests need a round trip to a distant data center. It can also strengthen perimeter security, since sensitive data stays local and only essential signals move outward. Overall, the hybrid edge-cloud model supports scalable, resilient operations by placing compute and analytics where they are most effective, while careful governance keeps deployments manageable.

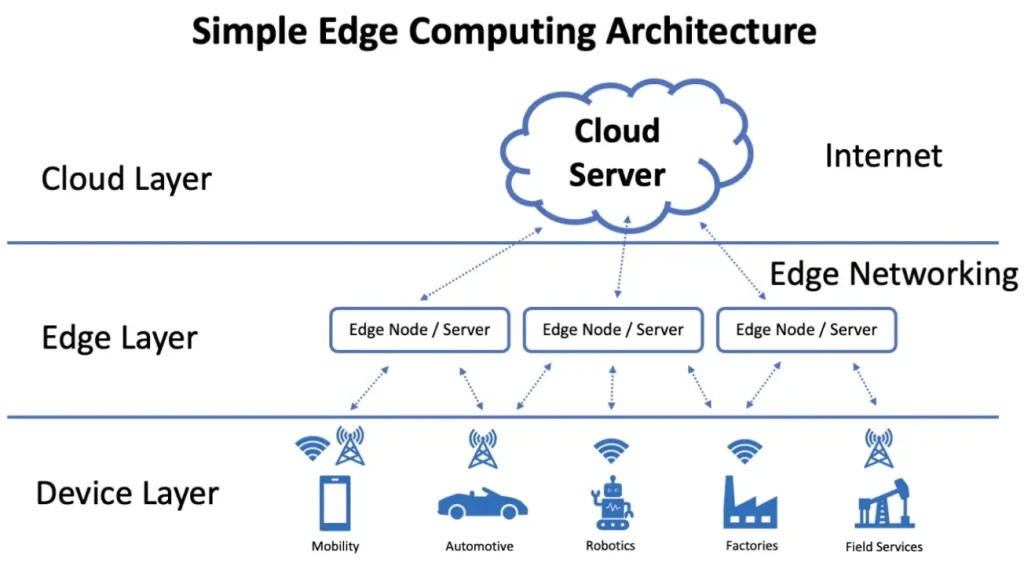

Put another way, this model is near-data processing at the network boundary, where computing happens close to sensors and devices. Rather than relying on one remote data center, organizations deploy a distributed edge fabric of sensors, gateways, and compact data centers that keeps essential workloads close to their origin. This boundary-centric approach borrows from related patterns such as fog computing and edge AI, delivering fast insights while keeping data local when possible. In practice, it enables scalable deployment across sites, reduces data movement, and supports governance with localized controls.

Edge Computing for Real-Time Operations: Latency Reduction and AI at the Edge

By moving compute closer to the data source, Edge Computing reduces latency and enables real-time control for devices such as industrial sensors, smart cameras, and autonomous systems. This approach aligns with IoT at the edge, where data processing and initial analytics happen locally, minimizing round trips to the core cloud and reducing network strain.
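One way to reason about where a workload should run is to compare each tier's total response time (network round trip plus compute) against the workload's deadline. The sketch below is illustrative only: the `Tier` class, the `pick_tier` helper, and every millisecond figure are assumptions made for the example, not measurements from any real deployment.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Tier:
    """A place where a workload could run (hypothetical model)."""
    name: str
    compute_ms: float      # time to process one request on this tier
    network_rtt_ms: float  # round trip between the device and this tier

    def total_ms(self) -> float:
        return self.compute_ms + self.network_rtt_ms


def pick_tier(tiers: Sequence[Tier], deadline_ms: float) -> Optional[Tier]:
    """Return the first tier, in the caller's preference order,
    whose total response time fits the deadline; None if none fits."""
    for tier in tiers:
        if tier.total_ms() <= deadline_ms:
            return tier
    return None


# Made-up numbers: the cloud computes faster but is far away,
# the edge node is slower but only a short hop from the device.
cloud = Tier("cloud", compute_ms=5.0, network_rtt_ms=80.0)
edge = Tier("edge", compute_ms=15.0, network_rtt_ms=2.0)

# Prefer the cloud when it is fast enough, otherwise fall back to the edge.
choice = pick_tier([cloud, edge], deadline_ms=20.0)
print(choice.name if choice else "no tier meets the deadline")
```

With these assumed figures, a 20 ms control loop can only be served from the edge node, while a workload that tolerates 100 ms could stay in the cloud.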

The rise of AI at the edge allows inference on devices themselves, enabling tasks like anomaly detection, computer vision, and predictive maintenance without constant cloud round-trips. This not only speeds decision-making but also reduces data movement, lowers costs, and enhances the ability to operate autonomously at the perimeter.
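As a rough illustration of on-device anomaly detection, the sketch below flags a sensor reading when it sits far from the recent average. It uses a simple rolling z-score rather than a trained model, so it stands in for the idea of local inference rather than representing any particular edge AI framework. The class name, window size, warm-up count, and threshold are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings far from the recent mean (hypothetical helper)."""

    def __init__(self, window: int = 20, threshold: float = 3.0, warmup: int = 5):
        self.values = deque(maxlen=window)  # recent normal readings
        self.threshold = threshold          # z-score cutoff (assumed)
        self.warmup = warmup                # readings needed before judging

    def observe(self, value: float) -> bool:
        """Return True if the value looks anomalous; runs entirely locally."""
        is_anomaly = False
        if len(self.values) >= self.warmup:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            # With zero spread there is no scale to judge against,
            # so nothing is flagged in that case.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so they do not skew it.
            self.values.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
readings = [20.0, 20.1, 19.9, 20.2, 19.8, 20.0, 20.1, 35.0, 20.05]
flags = [detector.observe(r) for r in readings]
print(flags)
```

Only the flagged reading would need to leave the device; the routine readings never have to cross the network.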

Edge Computing Benefits at the Perimeter: Security, Bandwidth, and Resilience

Processing data locally significantly reduces bandwidth needs and preserves network resources. Only essential summaries or events are sent upstream, while raw data can remain at the source when appropriate. This model also supports perimeter security through tighter local control and governance.
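The "summaries upstream, raw data local" pattern can be sketched as a small aggregation step on the edge node. The `summarize` function, the field names, the alert threshold, and the synthetic readings are all assumptions for illustration; a real deployment would choose its own schema and transport.

```python
import json
from statistics import mean


def summarize(readings, alert_above):
    """Collapse a non-empty batch of raw readings into one compact message.
    Raw values stay on the edge node; only this dict is sent upstream."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Individual values are forwarded only when they cross the threshold.
        "alerts": [r for r in readings if r > alert_above],
    }


# Synthetic batch: 600 readings near 20 degrees with one spike.
raw = [20.0 + (i % 10) * 0.1 for i in range(600)]
raw[300] = 35.4

summary = summarize(raw, alert_above=30.0)
raw_bytes = len(json.dumps(raw))
summary_bytes = len(json.dumps(summary))
print(summary["count"], summary["alerts"], summary_bytes < raw_bytes)
```

The saving depends on batch size: for very small batches the summary can be larger than the raw data, so the aggregation window is a tuning choice.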

Resilience and uptime improve because edge workloads keep operating offline or over intermittent connectivity, then synchronize with the cloud when a stable link returns. This setup also limits exposure of sensitive data and enables stricter access controls at the network edge, while maintaining reliable operations across distributed sites.
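Operating through an outage and synchronizing later is often implemented as a store-and-forward buffer. The following is a minimal sketch under stated assumptions: `StoreAndForward` and `FakeLink` are hypothetical names, the `send` callable is assumed to return `True` on success, and the buffer lives in memory only (a real node would likely persist it to disk).

```python
from collections import deque


class StoreAndForward:
    """Queues messages locally and drains them when the uplink works."""

    def __init__(self, send, max_buffered=1000):
        self.send = send  # callable(message) -> bool, True when delivered
        # When the buffer is full, deque drops the oldest entry.
        self.buffer = deque(maxlen=max_buffered)

    def publish(self, message):
        self.buffer.append(message)
        return self.flush()

    def flush(self):
        """Send oldest-first; stop at the first failure to keep ordering."""
        while self.buffer:
            if not self.send(self.buffer[0]):
                return False
            self.buffer.popleft()
        return True


class FakeLink:
    """Stand-in for a cloud uplink that can be toggled up or down."""

    def __init__(self):
        self.up = False
        self.delivered = []

    def send(self, message):
        if self.up:
            self.delivered.append(message)
        return self.up


link = FakeLink()
node = StoreAndForward(link.send)
node.publish("a")  # link is down, message is buffered
node.publish("b")
link.up = True     # connectivity returns
node.publish("c")  # backlog and the new message are delivered in order
print(link.delivered)
```

Stopping at the first failed send keeps messages in their original order, at the cost of holding back newer messages until the oldest one gets through.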

Frequently Asked Questions

What are the core edge computing benefits for IoT at the edge, and how does latency reduction enable real-time applications?

Edge Computing relocates compute and analytics to the network edge, which lowers latency, optimizes bandwidth, and improves resilience. For IoT at the edge, this latency reduction enables real-time monitoring and immediate responses at the source, reduces cloud transport costs, and helps preserve data privacy by processing data locally when needed.

How does AI at the edge enhance perimeter security and enable faster, autonomous decisions in edge computing deployments?

AI at the edge enables on-device inference and local analytics, reducing the data sent to the cloud and strengthening perimeter security. By running models at the edge, organizations can detect anomalies, enforce access controls, and make fast autonomous decisions even with intermittent connectivity, improving overall edge security and reliability.

| Key Area | Summary | Examples | Primary Benefit |
|---|---|---|---|
| What Edge Computing Is | Moves compute, storage, and analytics closer to data sources at the network perimeter; enables a hybrid edge-cloud model. | Factory floor robots, smart cameras, industrial sensors | Lower latency; faster, near-real-time insights; reduced bandwidth |
| Core Concepts & Architecture | Edge devices, gateways, and micro data centers form a distributed fabric that coordinates with central cloud services. | Edge devices, edge gateways, micro data centers | Scalability and flexible deployment across sites |
| Why Proximity Matters | Processing at the source enables instant responses, latency savings, and supports data privacy and sovereignty. | Factory robots adjusting parameters; smart city traffic rerouting | Real-time decisions; improved privacy controls |
| Benefits | Latency reduction, bandwidth optimization, resilience, security, and localized intelligence. | Various deployments across manufacturing, health, retail | Operational efficiency; lower costs; enhanced security |
| Architecture & Data Flow | Data flows from edge to gateway to micro data centers or cloud; local processing with orchestration to keep edge workloads aligned with goals. | Edge preprocessing, local actions, cloud coordination | Up-to-date models and coordinated workloads across sites |
| AI at the Edge | Inference on edge devices or gateways enables tasks like anomaly detection, computer vision, and predictive maintenance; fog computing adds intermediate processing layers. | Edge AI models; fog layers | Faster decisions; reduced data movement; privacy benefits |
| Industries & Use Cases | Manufacturing, transportation, healthcare, and retail leveraging edge intelligence for real-time outcomes. | Predictive maintenance; real-time routing; patient alerts; personalized retail experiences | Improved uptime; safer, more responsive services |
| Best Practices & Governance | Workload partitioning; security-by-design; data governance and privacy; interoperability; reliability; power considerations. | Edge deployment patterns; containers, orchestration | Reliable, secure, scalable edge deployments |
| Future Trends | AI accelerators at the edge; 5G and beyond-5G; federated learning; edge-cloud collaboration. | Edge devices with AI accelerators; federated learning | Smarter, privacy-preserving models; expanded perimeter capabilities |

Summary

Edge Computing is a practical, scalable approach to bringing computation and intelligence closer to the data source. By distributing workloads across edge devices, gateways, micro data centers, and the cloud, organizations can achieve lower latency, better bandwidth efficiency, stronger security, and greater resilience. As the perimeter becomes smarter and more capable, Edge Computing will continue to power a wide range of applications, from industrial automation and smart cities to healthcare and retail, unlocking faster insights and more responsive services. Embracing this paradigm will help organizations stay agile, competitive, and ready for the next wave of digital transformation.